Understanding and improving the factuality of responses generated by large language models (LLMs) is a critical area of artificial intelligence research. The field investigates how well these models stay truthful when answering open-ended, fact-seeking queries across a wide range of topics. Despite their advances, LLMs often struggle to generate content free of factual inaccuracies, which poses a significant reliability problem in real-world applications where accurate information is paramount.

Existing approaches to assessing the factuality of model-generated content typically rely on direct human evaluation. While valuable, this process is limited by the subjectivity and variability of human judgment and by the difficulty of scaling human labor to large datasets or many models. Consequently, there is a need for more automated and objective methods of assessing the accuracy of information produced by LLMs.

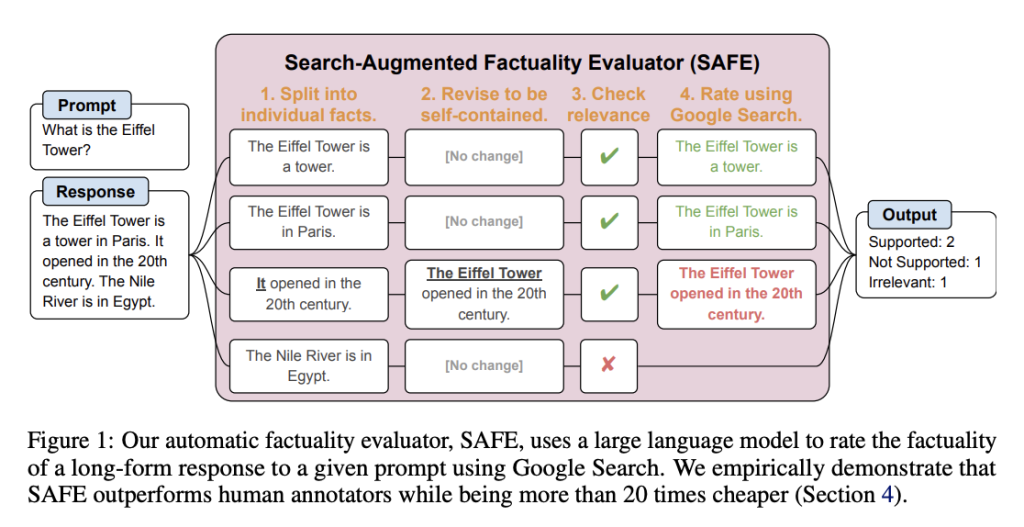

Researchers from Google DeepMind and Stanford University have introduced a novel automated evaluation framework named the Search-Augmented Factuality Evaluator (SAFE). This framework aims to tackle the challenge of assessing the factuality of content generated by LLMs. By automating the evaluation process, SAFE presents a scalable and efficient solution to verify the accuracy of information produced by these models, offering a significant advancement over the traditional, labor-intensive methods of fact-checking that rely heavily on human annotators.

SAFE uses an LLM to break a long-form response down into individual facts, revise each fact to be self-contained, and check whether it is relevant to the original prompt. Each relevant fact is then verified independently using Google Search as a reference: through a multi-step reasoning process, the LLM issues search queries and judges whether the returned results support the fact. To benchmark long-form factuality, the researchers first used GPT-4 to generate LongFact, a prompt set of thousands of fact-seeking questions spanning 38 topics. They then applied SAFE to responses from thirteen language models across four model families (Gemini, GPT, Claude, and PaLM-2) to compare their factuality. Because every fact is checked separately against external evidence, the approach yields a fine-grained and more objective assessment of LLM-generated content.
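To make the pipeline above more concrete, here is a minimal Python sketch of a SAFE-style loop. It is not the authors' implementation: SAFE uses an LLM to split, revise, and rate facts and sends real queries to Google Search, whereas this toy version splits on sentence boundaries, skips the self-contained revision step, treats word overlap as a proxy for "relevance" and "support," and searches a small in-memory corpus. All names (`tokenize`, `split_into_facts`, `toy_search`, `rate_fact`, `safe_style_eval`), the label strings, and the stopword list are hypothetical. Each follow-up query targets only the words that earlier evidence failed to cover, loosely mimicking the multi-step search-and-reason process.

```python
import re

# Hypothetical label names for this sketch; SAFE's own prompts and outputs differ.
SUPPORTED, NOT_SUPPORTED, IRRELEVANT = "supported", "not_supported", "irrelevant"

# Hypothetical stopword list so that function words do not count as evidence.
STOPWORDS = {"a", "an", "and", "are", "in", "is", "of", "the", "was"}


def tokenize(text: str) -> set[str]:
    """Lowercased content words; a crude stand-in for an LLM reading the text."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def split_into_facts(response: str) -> list[str]:
    """Stand-in for SAFE's LLM fact splitter: treat each sentence as one fact."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", response) if s.strip()]


def toy_search(query: set[str], corpus: list[str], k: int = 1) -> list[str]:
    """Stand-in for Google Search: return the top-k snippets by word overlap."""
    scored = [(len(query & tokenize(doc)), doc) for doc in corpus]
    hits = [pair for pair in scored if pair[0] > 0]
    hits.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep corpus order
    return [doc for _, doc in hits[:k]]


def rate_fact(fact: str, prompt_terms: set[str], corpus: list[str],
              max_queries: int = 3) -> str:
    """Rate one fact with up to max_queries searches (a toy multi-step check)."""
    fact_words = tokenize(fact)
    if not fact_words & prompt_terms:
        return IRRELEVANT  # SAFE likewise sets aside facts unrelated to the prompt
    evidence: set[str] = set()
    query = fact_words
    for _ in range(max_queries):
        for snippet in toy_search(query, corpus):
            evidence |= tokenize(snippet)
        missing = fact_words - evidence
        if not missing:
            return SUPPORTED
        query = missing  # follow-up query targets words not yet covered by evidence
    return NOT_SUPPORTED


def safe_style_eval(prompt: str, response: str, corpus: list[str]) -> dict[str, str]:
    """Split a response into facts and rate each one independently."""
    prompt_terms = tokenize(prompt)
    return {fact: rate_fact(fact, prompt_terms, corpus)
            for fact in split_into_facts(response)}


corpus = [
    "The Eiffel Tower is in Paris.",
    "The Eiffel Tower was completed in 1889.",
]
response = ("The Eiffel Tower is in Paris and was completed in 1889. "
            "The Eiffel Tower is made of gold. Bananas are yellow.")
# Prints the labels supported, not_supported, irrelevant, in that order.
for fact, label in safe_style_eval("Tell me about the Eiffel Tower.", response, corpus).items():
    print(f"{label:>13}  {fact}")
```

Word overlap is a weak proxy for an LLM's judgment (it cannot detect negation or paraphrase, for example); the sketch only illustrates the structure of splitting a response, filtering for relevance, and then searching and rating each fact on its own. In the example, the first fact needs two queries: the first retrieves the 1889 snippet, and the follow-up query for the still-unverified word "paris" retrieves the second.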

SAFE's effectiveness is supported quantitatively: on a set of roughly 16,000 individual facts, its ratings agreed with those of crowdsourced human annotators 72% of the time. On a random subset of 100 facts where SAFE and the human annotators disagreed, further review found SAFE's rating to be correct 76% of the time. The framework is also more than 20 times cheaper than human annotation. Benchmarking across the thirteen language models indicated that larger models, such as GPT-4-Turbo, generally achieved better long-form factuality, with factual precision reaching up to 95%.
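The headline numbers above are simple ratios over per-fact labels. The sketch below shows how they could be computed, assuming hypothetical label names (`supported`, `not_supported`, `irrelevant`) and, as we understand the paper's definition, that factual precision counts only supported and not-supported facts while ignoring irrelevant ones. The function names and sample labels are illustrative only, not data from the study.

```python
from collections import Counter

# Hypothetical label names chosen for this sketch.
SUPPORTED, NOT_SUPPORTED, IRRELEVANT = "supported", "not_supported", "irrelevant"


def factual_precision(labels: list[str]) -> float:
    """Supported / (supported + not supported); irrelevant facts are excluded."""
    counts = Counter(labels)
    rated = counts[SUPPORTED] + counts[NOT_SUPPORTED]
    if rated == 0:
        raise ValueError("no supported or not-supported facts to score")
    return counts[SUPPORTED] / rated


def agreement_rate(safe: list[str], human: list[str]) -> float:
    """Fraction of facts on which the automated rater and humans give the same label."""
    if len(safe) != len(human) or not safe:
        raise ValueError("label lists must be non-empty and equal in length")
    return sum(s == h for s, h in zip(safe, human)) / len(safe)


def disagreement_win_rate(safe: list[str], human: list[str], gold: list[str]) -> float:
    """Among disagreements only, fraction where the automated rater matches the re-reviewed label."""
    disputed = [i for i, (s, h) in enumerate(zip(safe, human)) if s != h]
    if not disputed:
        raise ValueError("no disagreements to adjudicate")
    return sum(safe[i] == gold[i] for i in disputed) / len(disputed)


# Hypothetical toy labels, not data from the study.
response_labels = [SUPPORTED, SUPPORTED, SUPPORTED, NOT_SUPPORTED, IRRELEVANT]
print(factual_precision(response_labels))  # 0.75

safe = [SUPPORTED, SUPPORTED, NOT_SUPPORTED, SUPPORTED]
human = [SUPPORTED, NOT_SUPPORTED, NOT_SUPPORTED, SUPPORTED]
gold = [SUPPORTED, SUPPORTED, NOT_SUPPORTED, SUPPORTED]  # labels after re-review
print(agreement_rate(safe, human))  # 0.75
print(disagreement_win_rate(safe, human, gold))  # 1.0
```

Roughly speaking, `agreement_rate` corresponds to the 72% figure, `disagreement_win_rate` to the 76% figure on the 100 disputed facts, and `factual_precision` to the per-response precision used in the benchmark. Note that the win rate is computed only over disagreements, so it says nothing about cases where SAFE and the annotators were both wrong.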

To conclude, the research introduces SAFE, a framework from Google DeepMind and Stanford University for assessing the long-form factuality of LLM responses. SAFE uses Google Search to verify the individual facts in a response and shows high agreement with human assessments. By providing a scalable, cost-efficient method for factuality evaluation, this work is a meaningful step toward more trustworthy and reliable LLM-generated information.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Materials Science, he is exploring new advancements and creating opportunities to contribute.